| Archive Blog Cast Forum RSS Books! Poll Results About Search Fan Art Podcast More Stuff Random |

|

Classic comic reruns every day

|

1 Simon: Tally ho! All unshrunk! Sorry we're late. Had an awful kerfuffle with some hippos on the Blue Nile.

2 Terry: Dr Simon Beard? You told Jane it would take six weeks to get here.

3 Simon: Yes, six weeks and nineteen minutes ago.

3 Terry: Isn't that a bit overly precise?

4 Simon: Nothing is too precise when the fate of humanity is on the line!

|

First (1) | Previous (3630) | Next (3632) || Latest Rerun (2895) |

Latest New (5380) First 5 | Previous 5 | Next 5 | Latest 5 Steve and Terry theme: First | Previous | Next | Latest || First 5 | Previous 5 | Next 5 | Latest 5 This strip's permanent URL: http://www.irregularwebcomic.net/3631.html

Annotations off: turn on

Annotations on: turn off

|

I thought and thought and thought about how to show the unshrinking...

and eventually decided to brush it under the carpet.

Apparently the unshrinking device looks like a giant magnifying glass.

Another thing to get out of the way is that this topic has also been tackled by the webcomic Saturday Morning Breakfast Cereal. No doubt many of you will have seen this already, though I personally found it to be still rather cryptic and unenlightening, except to tell me what quantum computers don't do - namely, "trying all the answers in parallel". Okay, so if that's not what quantum computers are about, what are they doing?

Firstly, let's review how digital computers work. These are the computers we use every day, and you're using one right now to read this (unless you're reading a print-out). One of the most fundamental things a computer needs to do is to represent data, or information, in such a way that it can be stored, manipulated, and read out in a way that is meaningful for human users. Computers do this by rendering data into patterns of bits, which is short for binary digits.

A bit is essentially either the digit 0 or the digit 1. A bit may be represented in other ways as well, such as heads or tails; or positive or negative; or on or off. The important thing is that there are exactly two choices of symbol or state to represent the bit. This is why it's called binary digit - because it represents one of two binary states. The word comes from the Latin binarius, meaning "consisting of two", and the bi- prefix can be seen in other English words relating to the number two, such as bicycle (two wheels), binocular (having two eyes), or biped (walking on two feet).

A binary digit is different from the usual decimal digits we use for counting: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9. "Decimal" means related to the number 10, and you can see there are ten different digits. But with these ten digits, we can represent numbers of any magnitude by using a positional or place-value system. When we write 752, we mean the number formed by adding 2 (the rightmost digit), plus 5 multiplied by 10, plus 7 multiplied by 102. As we move positional places to the left, each digit represents the number equal to itself multiplied by a power of the base (or radix) of the number system, in this case 10. The first digit on the right is the number of ones, the next digit left of it is the number of tens, the next is the number of hundreds, followed the number of thousands and so on. We are all familiar with reading decimal numbers in this way.

In a binary representation, you do the same thing, except that the base of the system is 2, because there are two different digits. So let's read the binary number 1001101. The first binary digit (or bit) on the right represents the number of ones (1), the next number to the left represents the number of twos (0), then the number of fours (1), the number of eights (1), the number of sixteens (0), the number of 32s (0), and finally in this number the number of 64s (1). Adding these up: 1 + 4 + 8 + 64 = 77. So the binary number 1001101 is equal to the decimal number 77.

Why am I going into such detail on this point? Because it's important to understand that bits can represent much more than just the 0s and 1s they are made of. Imagine if we want to represent the entire contents of a book. What if we wanted to represent this in decimal notation, using just the digits 0 to 9? Well, we could convert each letter of the alphabet to a number, from A = 1 to Z = 26. We could even add some extra numbers to represent punctuation symbols, and lower case letters if we want. Then we could write out the entire book in numbers, by using the code numbers for each letter or symbol. You'd end up with a book full of digits, which a human couldn't read, but importantly the information in the book is still there, because you can decode the numbers back into letters and punctuation, reproducing it.

From here it's just a tiny step to encoding your book in bits, rather than decimal digits. Just assign each letter and punctuation symbol a binary number code, such as A = 1000001 to Z = 1011010, and record those[1]. You end up with a whole bunch of 0s and 1s and nothing else, but again, all the contents of the book are there, and just need to be decoded back into English for someone to read.

From here it's a relatively small step from encoding English text to storing other sorts of information as well. Images can be represented by numbers which give the brightness levels of various colours in each pixel, and audio can be represented by numbers giving the amplitudes of sound waves at different times, and movies can be represented by combinations of both of those things. It's not quite this straightforward, as we've come up with cleverer ways to represent these sorts of data that reduce the quite large amounts of storage needed. But in general, this is how computers encode the data that we use and manipulate every day - all as sequences of bits.

So much for representing data. A computer also has to physically store the bits it uses somehow. There are several ways it can do this:

The next thing a computer needs to do is manipulate the bits it is storing, to carry out calculations on them. This can be done electronically using logic gates. Conceptually, a logic gate takes one or two bits as input, and produces a bit as output which depends on the input bits. There's a lot that can be said about how these work at the logical level, but for now let's just say that by combining logic gates you can get them to perform arithmetic on binary encoded numbers, and at higher conceptual levels they can perform operations such as word processing, image processing, and so on - all the things we use computers to do. Jumping down a conceptual level, logic gates are implemented as electronic circuits which change in output voltage according to the voltages of their inputs.

Okay, that's a very quick overview of digital computers. Now let's talk about quantum computers, and where they differ from digital computers. And the differences start right at the very fundamental point of how they represent data.

A quantum computer doesn't use bits. It doesn't represent data using individual pieces of information that hold one of two possible symbols or states. The fundamental unit of data in a quantum computer is not a bit - it is a very different thing called a quantum bit, or qubit for short. The "bit" part of the name is carried over merely by analogy to the "fundamental unit of data" of digital computing, and the "bi-" part no longer indicates an etymological meaning of "two". Qubits do not have just two possible states; they are not really binary at all.

So what is a qubit? Let's imagine we want to encode a regular digital bit, which has two possible states, using some other physical system that, at least naively, has two possible states. I'm going to choose the polarisation of light. I've written a whole other annotation about polarisation, and it might be useful to review that material before continuing if you don't remember much about polarisation.

Now, I'm going to encode my bit of data in the polarisation of a light beam, such that to represent a 0 the beam will be polarised with left-handed circular (LHC) polarisation, and to represent a 1 the beam will be polarised with right-handed circular (RHC) polarisation. Sound reasonable? But wait, some of you are saying, what if the beam is linearly polarised? Or it's elliptically polarised? Or it's not polarised at all? What value do any of those represent? Good question!

Let's simplify things by talking about a single photon of light, rather than a beam of many photons. A photon, as it happens, can have two different measured polarisation states: LHC, or RHC. By no coincidence, these neatly align with the values I chose to represent 0 or 1. Okay. In a classical physics world, we'd be done, and we could go and design a digital computer that uses photons of light to encode bits in their polarisation.

But photons are not described by classical physics. Photons require the use of quantum physics to fully describe their behaviour. This means that rather than merely being in one of either the LHC or RHC states, a photon can be in a quantum mechanical superposition of these states. Without delving into the precise mathematical intricacies, we can imagine a photon as potentially being "half LHC and half RHC", or "1/4 LHC and 3/4 RHC", or any other combination that adds up to 1. However, when we measure the polarisation of a photon, we only ever see it as either LHC or RHC. We don't get a polarisation measurement of "half LHC and half RHC". What is going on?

The fractions in "half LHC and half RHC" are manifested as probabilities. Imagine we have a continuous stream of individual photons, all in the polarisation state "half LHC and half RHC". If we measure the polarisations of these photons, each individual photon will be seen to be either LHC or RHC... but we will see that approximately half the photons we measure read as LHC and the other half will read as RHC. Similarly, if we measure a stream of photons that are all in the same "1/4 LHC and 3/4 RHC" polarisation state, we will measure roughly 1/4 of them as LHC and 3/4 of them as RHC. In other words, if we measure the polarisation of a "half LHC and half RHC" photon, there is a 50% chance we'll measure it as LHC, and a 50% chance we'll measure it as RHC. And if we measure the polarisation of a "1/4 LHC and 3/4 RHC" photon, there is a 25% chance we'll measure it as LHC, and a 75% chance we'll measure it as RHC.

This may sound weird and counter-intuitive, because everyday objects we interact with don't show this sort of superposition of states and probabilistic behaviour. This is in fact the motivation behind the Schrödinger's cat thought experiment, which is an attempt to show how absurd quantum behaviour is if you think about it on a macro level. But at the level of fundamental particles, it's what happens, and we have plenty of experiments that demonstrate this superposed-states/probabilistic behaviour.

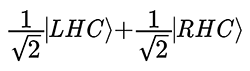

Going one level deeper into the mathematics, the probabilities we can measure - namely the halves in "half LHC and half RHC", or the 1/4 and 3/4 in "1/4 LHC and 3/4 RHC" - are actually the magnitudes of the squares of numbers called probability amplitudes in quantum mechanics. In other words, mathematically, the superposed state we've called "half LHC and half RHC" can more properly be written using the square root of a half, as:

(equation 1)

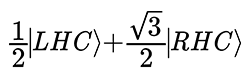

(equation 1)and the superposed state we've called "1/4 LHC and 3/4 RHC" can more properly be written as:

(equation 2)

(equation 2)In this form, the mathematical operations that govern the physics of the photons are more meaningful and useful. The thing to remember is that to produce the probabilities of measuring LHC or RHC, you need to square the given probability amplitudes and then take the magnitude of the resulting number.

But wait a second, you might say, if we're now squaring the probability amplitude 1/√ 2 to produce the observed probability 1/2, what's to say the amplitude isn't negative: -1/√ 2 , because when you square that it also gives 1/2? In fact, nothing stops this; the probability amplitudes can indeed be negative. In fact, because we take the magnitude of the square, the probability will work out even if the amplitude is an imaginary or complex number![2]

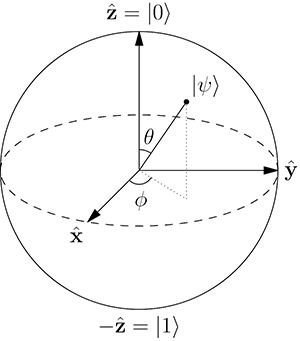

At this point, it might be useful to visualise the difference between a bit and a qubit using a diagram. This can be done by looking at the diagram known as the Bloch sphere, reproduced here as Figure 1.

Figure 1: Bloch sphere. (Creative Commons BY-SA by Glosser.ca from Wikimedia Commons.) |

The Bloch sphere shows graphically the difference between possible values of a bit and a qubit. The two possible values of a bit are written as |0⟩ and |1⟩, which can just be thought of as fancy quantum mechanics notation for 0 and 1. The value |0⟩ lies at the top of the sphere - the north pole if you will. The value |1⟩ lies directly opposite it at the bottom of the sphere - the south pole. For a bit, these are the only two possible data values it can contain.

For a qubit, the situation is very different. As we've seen above, a qubit can hold any value given by a sum of probability amplitudes of |0⟩ and |1⟩. If the squares of the amplitudes add to give 1, as constrained by the probabilities of normal measurement, this restricts the location of the qubit values to the surface of the Bloch sphere. So:

In other words, we can't measure the superposed state of the qubit directly - we still only read out a 0 or a 1. But the superposed state is there before we measure it, and it can affect other things, and be affected by other things - and this is the basis of quantum computing.

In a nutshell: Quantum computing is operating on and manipulating the potentially superposed states of qubits, and then reading out the probabilistic values of the qubits at the end of the calculation.

How do you operate on qubits? You can't just use digital logic gates, as you would with regular bits. Instead you use physical interactions that affect the states of the physical components you are using to represent the qubits. Photon polarisations are only one possible way to do this, but you can also represent qubits with things such as the quantum spin of electrons, the nuclear spin of atomic nuclei, or other more esoteric quantum systems.[3] Whatever you use, such quantum systems exhibit a property known as quantum interference - which I don't want to go into in any detail here, but which essentially means that their probability amplitudes interact in ways which can affect the outcomes of measuring the system.[4]

So for example, if you get a bunch of electrons with their spins encoding data as qubits, then operating on some of the electrons by magnetic fields that could change their spin states can also affect the states of some of the other electrons not being directly manipulated. This is where my understanding, even after some extensive reading, peters out, so I'll stop there and take a few steps back to try to bring all this together.

The important thing about operating on qubits is that you can affect the probability amplitudes of all of your qubits, essentially in unison. If you're clever, you can hopefully design an algorithm that works to push the probability amplitudes of all of the qubits towards the correct answer that you're trying to compute, while reducing the probability amplitude of incorrect solutions. Such algorithms exist for some relatively simple problems - possibly the most interesting one is Shor's algorithm, which is a quantum algorithm designed for factorising integers. (I won't even pretend to understand how Shor's algorithm works - despite reading a description, I don't really grok it.)

Some quantum computing algorithms are deterministic, meaning they always give the correct answer. But others, which will usually include more interesting and complex algorithms - may only be probabilistic. This can come about by the nature of qubits. Imagine that in the correct answer, a particular qubit should read out as 1. But the best algorithm available may only be capable of setting that qubit to the superposed state given by equation 2. This means that 75% of the time, the bit will give the correct value when read out, but 25% of the time it will produce the incorrect value of 0.

This means quantum computers don't necessarily always give the correct answer! What use is that?

Well, imagine you have an algorithm that manipulates a bunch of qubits, and when the qubits are read out, they give you the correct answer just 50% of the time. For another 25% of the time, it gives incorrect answer A, and the other 25% of the time it gives you incorrect answer B. Well, you can run the algorithm on your quantum computer say 100 times. On 48 of those runs it produces the number 1234, on 25 of the runs it gives the number 1238, and on the other 27 runs it gives the answer 1247. What do you think is the correct answer? Right, it's almost certainly 1234. You may not be 100% convinced, but for many purposes you only need to be 99.999% convinced anyway. If you need more convincing, you can run it more times to build up the statistics.

Okay, so we've very roughly covered what a quantum computer is and how it works. Why would you want to use a quantum computer? The answer is that some quantum algorithms can take advantage of the interference relations between qubits to significantly speed up some calculations that take a prohibitively long time in digital computers.

Factorising integers (what Shor's algorithm does), is a notoriously time-intensive problem for standard digital computers. Once the intergers get large enough - and we're talking a few hundred digits here, not billions or anything - even the fastest digital supercomputers we have would take thousands of years of calculating to factorise them. This fact is used in many cryptography applications, where you can generate a public cryptographic key by multiplying two large numbers (which is easy), but to break the encryption without the original numbers you need to factorise the key, which is effectively impossible with current technology.

However, a quantum algorithm, successfully implemented, could factorise large numbers like those used in modern cryptography in a very short amount of time. This is the power of quantum computing. A working quantum computer with enough qubits could break every encryption currently in use. Besides rendering encryption obsolete, there are many other practical uses for quantum algorithms, particularly in fields with many combinatorial interactions, such as DNA analysis, or indeed in studying quantum physics itself.

So what's the state of the art? Well, quantum computers are hard to build. Even building a single qubit is difficult. It needs to be an isolated quantum mechanical system, which basically means it has to be something like an elementary particle or an atomic nucleus, or something of that scale. To build such a thing, you often need large machines that generate precise magnetic fields, or to do the whole thing at cryogenic temperatures, or to isolate the thing in a vibration-proof room, or all of the above. You can't just stick a qubit into a silicon chip that plugs into your computer - not yet anyway.

So far, researchers have built arrays of just a few qubits, 4 or 8 of them. Shor's algorithm has been demonstrated to work on such a qubit array, but so far the highest number successfully factorised is just 21. The Canadian company D-Wave Systems manufactures and sells what it claims are quantum computers with around 1000 qubits, though there has been much scepticism in quantum computing circles that their machines are actually quantum computers as claimed. There seems to be some sort of quantum behaviour, and they show some speed-up over normal computers for some algorithms, but it's not yet entirely clear what is going on with them.

And that's where we are with quantum computing. It's at about the stage of technology that aeroplanes were in the years immediately after the Wright Brothers flew at Kitty Hawk. The very first crude attempts have been shown to work, and now we're trying to improve the technology in terms of capability and reliability. How fast it takes off from here... is anyone's guess at the moment.

[2] I talk about imaginary and complex numbers a bit here, if you need a refresher.

[3] You can see a larger list of possible physical qubit systems listed near the bottom of Wikipedia's qubit article.

[4] In terms of the Bloch sphere, I think this means that some of the qubits can even end up in states represented by points inside the sphere, rather than just on the surface - though honestly I'm a little fuzzy myself on this point, so don't take that as gospel.

|

LEGO® is a registered trademark of the LEGO Group of companies,

which does not sponsor, authorise, or endorse this site. This material is presented in accordance with the LEGO® Fair Play Guidelines. |